Trusted by accountants and firm marketers across the UK.

155%

increase in search visibility

119%

increase in organic traffic

30%

increase in organic leads

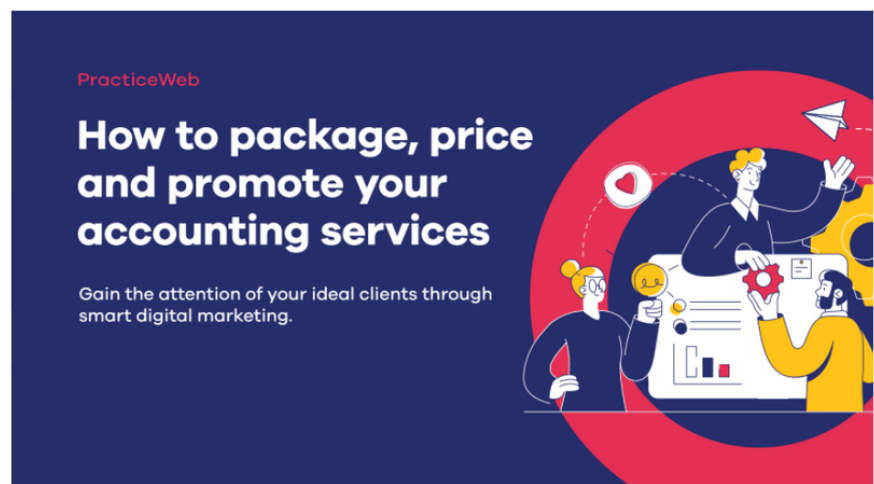

Latest Guide

How to package, price and promote your accounting services

Starting a new service is a great way to expand your revenue and enhance your proposition in the market. We’ve produced an easy-to-follow guide to help you gain the attention of your ideal clients.

How to submit award-winning award entries

Submitting an entry for an award can be both exciting and daunting. However, the ambition is universal: to win. Success can provide not just accolades but also a platform for further opportunities.

In this blog, we go through what you should consider when submitting an award entry and how to maximise your chances of success.

The role of AI in modern accounting

AI has transformed numerous industries, with accounting being no exception. Explore with us the role of AI in modern accounting.

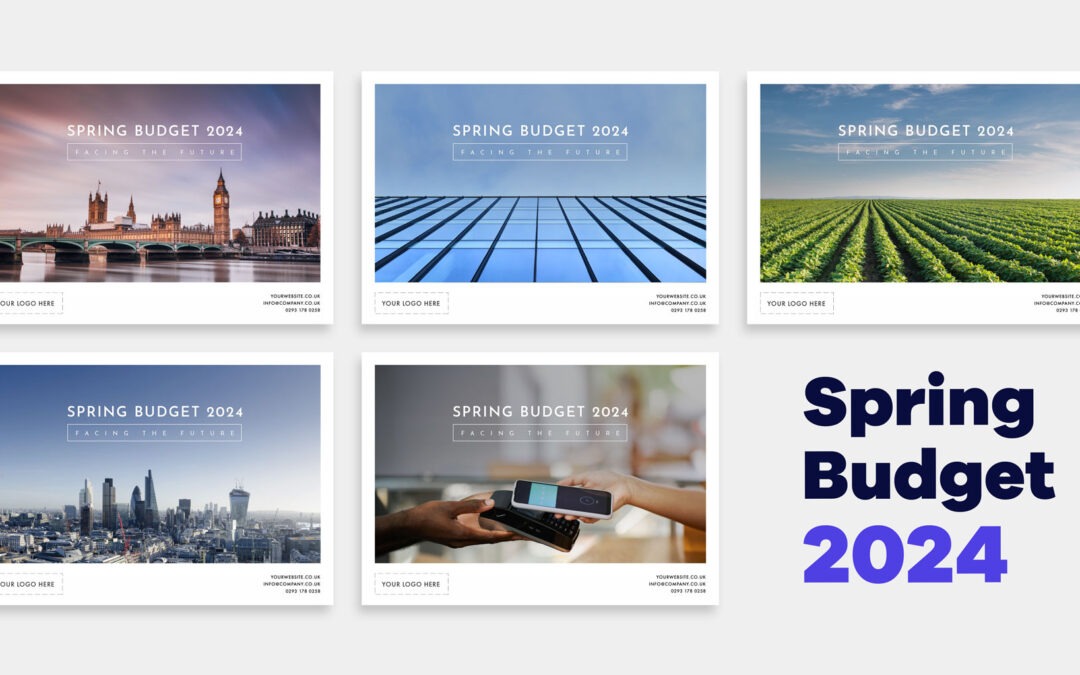

Spring Budget 2024: How to use your report

Our Spring Budget 2024 report sums up all the key announcements from 6 March. Find out how to make the most of it on your website, over email or as a printout.

How accountants should be marketing themselves in 2024

The way accountants are marketing themselves has evolved. It’s not just about numbers anymore; it’s about making those numbers work in the digital space.

Sign up to our newsletter

Our monthly newsletter is packed full of marketing tips, tricks, advice and insight to help you grow your practice through the power of marketing.

Kick off your growth journey

If you’d like a jargon-free call with one of our experts, we can give you some helpful advice on what you might need to start growing your brand.

Drop us an email

Give Zoe a call on